Hello,

I wrote a Python script that automatically fills tables in Marc Mentat with values from a CSV file. The CSV file has 500 columns, each with exactly 50,000 rows of values, and I import only the columns into Marc Mentat.

Every time I run the script, the memory usage of MentatOGL.exe in Task Manager climbs rapidly, at about 1 MB/s while values are being entered. It starts at around 2900 MB, and once it reaches approximately 4220 MB (around the 17th table), Mentat stops adding values and memory growth drops from 1 MB/s to about 0.1 MB/s, so the program is effectively frozen.

Can you advise me on why this is happening and how to fix it?
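For reference, here is a rough back-of-the-envelope calculation from the numbers above. It assumes that each CSV column ends up as one table (so 17 tables means 17 columns of 50,000 values) and that all of the memory growth comes from the table input; both are my assumptions, not something I have measured separately.

```python
# Rough estimate of memory growth per table and per value.
# Assumptions (not measured): one CSV column == one Mentat table,
# and all observed growth is caused by the table input.

ROWS_PER_COLUMN = 50_000      # values per CSV column
TOTAL_COLUMNS = 500           # columns in the CSV file
TABLES_BEFORE_STALL = 17      # approximate table count when input stalls

START_MB = 2900               # MentatOGL.exe memory at script start
STALL_MB = 4220               # memory when input stops progressing
GROWTH_RATE_MB_PER_S = 1.0    # observed growth rate during input

growth_mb = STALL_MB - START_MB
values_entered = TABLES_BEFORE_STALL * ROWS_PER_COLUMN
mb_per_table = growth_mb / TABLES_BEFORE_STALL
bytes_per_value = growth_mb * 1024 * 1024 / values_entered
seconds_to_stall = growth_mb / GROWTH_RATE_MB_PER_S

# Projected memory if all 500 columns were imported at the same rate
projected_total_mb = START_MB + mb_per_table * TOTAL_COLUMNS

print(f"Memory growth before stall: {growth_mb} MB")
print(f"Values entered before stall: {values_entered}")
print(f"Growth per table: {mb_per_table:.1f} MB")
print(f"Growth per value: {bytes_per_value:.0f} bytes")
print(f"Time to stall at 1 MB/s: {seconds_to_stall / 60:.0f} min")
print(f"Projected memory for all columns: {projected_total_mb:.0f} MB")
```

If these assumptions hold, each entered value costs far more memory than the value itself would need, and importing all 500 columns at this rate would require many times the memory used before the stall.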

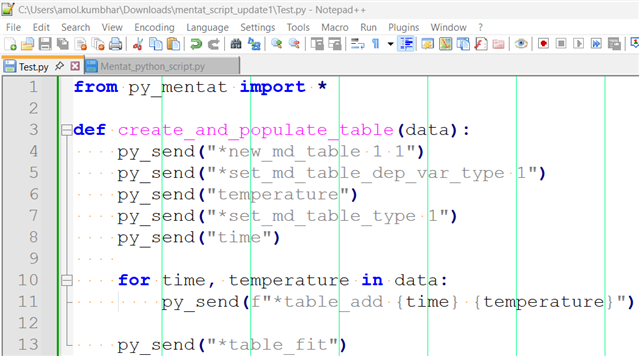

I am attaching my Python script with the modified table: mentat_script.rar