1 Introduction

Why is our process not capable? Our consultants around the world are often asked this question. Unfortunately, there is no easy answer. Very often, the cause of a not capable process falls into one of 4 categories:

- Poor MSA

- Meta data associated with parts

- Outliers – based on data quality

- Outliers – based on technology

All of these categories are connected, and very often problems come from all of them at once. We will define the categories later in the article.

By the nature of this problem, this article cannot be complete. There are too many variations and conditions that may be problematic, and it is not possible to cover all of them. The Q-DAS team is trying to point out the ones that are most common in our experience.

Please bear in mind that problems that stem from the principle of the production technology used are not discussed here in detail. Engineers at the production site should have deep knowledge of their production technologies. Any root cause analysis is ultimately up to the engineers at the production company, who can rely on their expertise in the production technology.

We must also address the possibility that a not capable process is actually OK and should not be much of a concern. Chapter 8 is dedicated to this topic.

2 Category definition

2.1 Poor MSA

MSA (measurement system analysis), or any gauge acceptance criteria, could be the problem when a serial process is not capable.

Why? First, let’s break down why we do any gauge acceptance:

We have to produce a part. The part is somehow defined by a customer / design engineer / technologist etc. We know which characteristics will be important for quality control on that part, and we know the tolerance limits. We also know (roughly) how to measure those characteristics. But what exact technology should be selected? Selecting the technology is a decision based on a combination of precision / convenience / price. Most of the time we should be able to make this decision based on information about the part and tolerances. Now we have to prove that our decision is good enough, usually with some gauge acceptance approach, such as any form of MSA.

Now let’s take a look at why poor MSA leads to a not capable process. For the explanation here, it does not matter according to which standard the MSA is done. The point is that the problem might come later in the process when the MSA is done (or designed) poorly or not done at all. A poorly done MSA experiment will not reveal the potential risk of “bad values” in the measurement. When the MSA is not done at all, engineers forget to think about some specifics of the measurement system, which again leads to “bad values”.

2.2 Meta data associated with parts

The meta data associated with parts can be all sorts of information: date/time, production machine, gauge, operator, order number, material number and many more. Why do we keep track of this data? To be able to differentiate produced parts and, when needed, to break down different processes into smaller pieces of information. Thanks to that, I can easily see that maybe only some specific part of the process is not capable, and I can focus on that part for deeper analysis.

Of course, this usually goes hand in hand with “problems” based on technology. Very often we will see a not capable process due to (for example) a production machine issue, but we need to understand that this issue may be there because of typical problems with the technology itself.

So we use the meta data association just to locate the issue for deeper analysis. It is still an important category to talk about, because it gives engineers the power to find the issue easily.

2.3 Outliers – based on technology

There are many different production technologies. Whether it is metal stamping or plastic molding, each has different potential issues that have to be controlled and ideally eliminated.

To be able to control the issues, engineers must first understand the whole technology and how it is influenced by process parameters, machine condition, outside conditions and other influential elements.

From my point of view, this is the most important category for debate, because it is very fluid and not always predictable. On the other hand, when these problems are detected and under control, it means a significant amount of money saved (profit).

2.4 Outliers – based on data quality

When we are talking about process capability, outliers will happen. An outlier can be recognized by a statistical test, by plausibility limits or simply by a decision of the user. It can be random or systematic, and in both cases it impacts the process in a negative way.

3 Poor MSA

3.1 Resolution

We define the resolution as the smallest possible change in the value recognizable by the measuring gauge. During an MSA Type 1 study or a VDA 5 study, we take the resolution into account. It is important to have a measuring gauge that is able to measure the process properly from this “sensitivity” point of view as well.

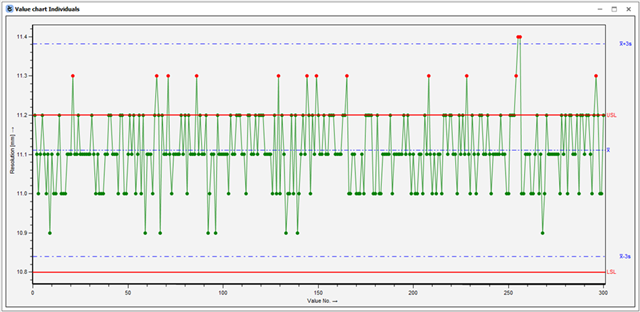

Imagine this part of the MSA is skipped, and then during analysis of the process you see this:

This process is not capable. The resolution of the gauge is clearly a problem. The measurement system is not able to recognize a change between different parts. It is therefore not possible to actually say whether my process is capable or not, because the values are invalid. A different measurement system must be used for this process.

This issue should be recognized during the MSA. When the resolution is not good enough, it will be indicated in the evaluation as a percentage or, in the case of a VDA 5 study, as a high uncertainty.
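As a rough illustration of such a resolution check, the sketch below expresses the gauge resolution as a percentage of the tolerance and counts how many distinct readings the gauge actually delivered. The 5 % limit is a commonly used acceptance criterion and is an assumption here, as are the function names and the sample values. Your company guideline may define a different limit.

```python
def resolution_percent(resolution, lsl, usl):
    """Return the gauge resolution as a percentage of the tolerance width."""
    return 100.0 * resolution / (usl - lsl)


def resolution_ok(resolution, lsl, usl, limit_percent=5.0):
    """Check the resolution against an assumed limit (5 % of the tolerance)."""
    return resolution_percent(resolution, lsl, usl) <= limit_percent


def distinct_values(values, digits=6):
    """Count the distinct readings; very few steps hint at a coarse gauge."""
    return len({round(v, digits) for v in values})


# Hypothetical characteristic: 10.00 +/- 0.05 mm.
LSL, USL = 9.95, 10.05
readings = [10.00, 10.02, 10.00, 9.98, 10.02, 10.00, 10.02, 9.98]

print(resolution_ok(0.02, LSL, USL))   # gauge that reads in 0.02 mm steps
print(resolution_ok(0.001, LSL, USL))  # gauge that reads in 0.001 mm steps
print(distinct_values(readings))       # number of steps seen in the data
```

A value chart that jumps between only a handful of levels, as counted by `distinct_values`, is a typical sign that the gauge is too coarse for the tolerance.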

3.2 Measurement system not precise enough

To oversimplify things, the precision of the measurement gauge is checked during a Type 1 study. We are basically testing whether our gauge is able to measure the reference value with a small enough deviation relative to the specification limits. In other words, we are checking whether the ratio between precision and tolerance is “small” enough. It is kind of an experimental version of the old “golden rule of metrology” (not really, this is an oversimplification!).

There are, of course, also other approaches to determining the precision of the gauge relative to the specification limits of the process to be measured, such as uncertainty studies.
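To make the Type 1 idea more concrete, here is a minimal sketch of the gauge capability indices Cg and Cgk. It assumes one widely used convention, $C_g = 0.2\,T / (6\,s_g)$ and $C_{gk} = (0.1\,T - |\bar{x}_g - x_m|) / (3\,s_g)$, where $T$ is the tolerance, $s_g$ the standard deviation of the repeated readings and $x_m$ the reference value. Company guidelines differ in the tolerance fraction and the spread factor, so both are parameters. The function name and the data are hypothetical.

```python
import statistics


def type1_study(values, reference, lsl, usl, tol_fraction=0.2, spread_factor=6.0):
    """Return (Cg, Cgk) for repeated measurements of one reference part.

    tol_fraction and spread_factor follow one common convention (assumption).
    """
    tolerance = usl - lsl
    s_g = statistics.stdev(values)               # repeatability of the gauge
    bias = statistics.mean(values) - reference   # systematic deviation
    cg = tol_fraction * tolerance / (spread_factor * s_g)
    cgk = (tol_fraction / 2 * tolerance - abs(bias)) / (spread_factor / 2 * s_g)
    return cg, cgk


# Hypothetical study: reference part 10.000 mm, tolerance 9.95 .. 10.05 mm.
readings = [10.001, 9.999, 10.000, 10.002, 9.998,
            10.001, 10.000, 9.999, 10.001, 9.999]
cg, cgk = type1_study(readings, reference=10.000, lsl=9.95, usl=10.05)

# The same gauge with a systematic offset of 0.006 mm: same spread, worse Cgk.
shifted = [v + 0.006 for v in readings]
cg_shifted, cgk_shifted = type1_study(shifted, reference=10.000, lsl=9.95, usl=10.05)

print(round(cg, 2), round(cgk, 2))
print(round(cg_shifted, 2), round(cgk_shifted, 2))
```

The second call shows why both indices are needed: the offset does not change the spread, so Cg stays the same, while Cgk drops because the gauge no longer hits the reference value.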

Consider an example. A measurement device must be selected for a new production process. To save time, the golden rule of metrology is applied to the selection: a measurement system is chosen whose precision is 10 times better than the tolerance limits of the process. The precision of the measurement system is just assumed based on the gauge manufacturer’s data sheets.

In reality, this could work out. Or maybe not. Nobody knows, because it was not proven by experiment. There might be a case where the measurement variation is larger than the tolerance, which would mean that even completely OK parts in the middle of the tolerance could be assessed by the measuring machine as out of tolerance.

As a result, the process might not be capable, but the reason is not necessarily a bad process, only a bad measuring machine. A different measuring machine has to be selected, and it must be proven that it is capable of measuring such characteristics.

3.3 Operator variation

Operators have influence. This influence can be assessed during an MSA study. We are usually trying to find out whether the variation between different operators is too high. And if it is, we have to find out why. Typically, there are 2 reasons why the variation would be too high:

- Some operators are not trained enough.

- The measurement technology does not give reproducible results across operators.

The first reason is not that big of a deal. There just has to be a good enough process behind quality assurance to be sure that operators get proper training. However, this cannot always be proven during an MSA study, because usually not all operators are tested when proving that the measurement system is capable. This problem then carries over into the “Meta data associated with parts” chapter.

The second reason, however, is entirely a subject for MSA. A well-thought-out MSA study can show whether there is a significant difference between operators. If there is, and it is not a problem of lacking training, the cause might be problematic ergonomics of the measurement device. In other words, there is no way to ensure that every operator will measure the same. That leads to inconsistent values, and in a tight tolerance field this leads to too large a variation in measurements. The process will therefore be assessed as not capable even though the parts might actually be “good”.

If this part of the gauge capability study is skipped, this problem might not be detected, and the not capable process might be incorrectly blamed on production.

The bottom line is that with such a huge uncertainty for each measurement, the gauge cannot be used, and a different system should be considered.
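A very simplified screening of the operator influence could look like the sketch below. It is not a full Type 2 study (no ANOVA, no separation of repeatability and reproducibility). It only compares the mean reading per operator and relates the range of those means to the tolerance. It assumes that all operators measured the same parts. The function names and the data are hypothetical.

```python
import statistics
from collections import defaultdict


def operator_means(records):
    """records: iterable of (operator, value); returns {operator: mean}."""
    grouped = defaultdict(list)
    for operator, value in records:
        grouped[operator].append(value)
    return {op: statistics.mean(vals) for op, vals in grouped.items()}


def operator_spread_percent(records, lsl, usl):
    """Range of the operator means as a percentage of the tolerance width."""
    means = list(operator_means(records).values())
    return 100.0 * (max(means) - min(means)) / (usl - lsl)


# Hypothetical readings of the same three parts by three operators.
records = [
    ("A", 10.00), ("A", 10.01), ("A", 9.99),
    ("B", 10.00), ("B", 9.99), ("B", 10.01),
    ("C", 10.03), ("C", 10.04), ("C", 10.02),
]
spread = operator_spread_percent(records, lsl=9.95, usl=10.05)
print(operator_means(records))
print(round(spread, 1))  # share of the tolerance consumed by operator differences
```

If one operator stands out, as operator "C" does here, the next question is whether it is a training issue or a sign that the device cannot be handled consistently.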

3.4 Linearity

The measurement system should be able to measure consistently across the tolerance field of the feature and slightly beyond. This can be easily tested during MSA with a linearity experiment. It is more or less multiple Type 1 studies on multiple reference parts.

When linearity over the tolerance field is not known, the system might not correctly measure parts around the tolerance limits, falsely assessing them as OK or NOK. Exactly the same behaviour could happen in the middle of the tolerance field. There, OK/NOK would not be an issue, but a large spread of values could lead to a not capable result. And as in the examples from previous chapters, the issue would not be with the production process, but only with a bad measurement system that must be either replaced or adjusted.
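The idea of a linearity experiment can be sketched as follows: measure several reference parts repeatedly, compute the bias at each reference value, and fit a straight line through the biases. A slope clearly different from zero means the bias changes across the measuring range. This is only a minimal sketch using an ordinary least squares fit. The acceptance criteria of a real linearity study are not covered, and the names and data are hypothetical.

```python
import statistics


def bias_by_reference(study):
    """study: {reference value: [repeated readings]} -> {reference: bias}."""
    return {ref: statistics.mean(vals) - ref for ref, vals in study.items()}


def fit_line(xs, ys):
    """Ordinary least squares fit y = intercept + slope * x."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


# Hypothetical study with reference parts at the lower limit, middle and upper limit.
study = {
    9.95: [9.948, 9.947, 9.949],
    10.00: [10.000, 10.001, 9.999],
    10.05: [10.052, 10.053, 10.051],
}
biases = bias_by_reference(study)
slope, intercept = fit_line(list(biases.keys()), list(biases.values()))
print(biases)
print(round(slope, 4))  # change of bias per unit of the measured value
```

In this made-up data set the gauge reads too low at the lower limit and too high at the upper limit, which stretches the measured spread of the process.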

3.5 Outside conditions

Measurement stations are usually spread all over the production facility. They are not always in a laboratory with stable conditions. That has to be factored in when the measurement system is selected and tested.

Typical outside conditions are temperature, drafts, vibrations and many others. The MSA study must factor in all these possible conditions.

Let’s take temperature as an example. The MSA study is done at the measuring station during the morning shift. The temperature in this place is more or less stable during the experiment, and the analysis shows no significant influence of temperature. Later in the production process, the analysis says that the process is not capable. Looking deeper into the data, we can see that the problem with measurements occurs only in the afternoon shift. The reason is that during the afternoon shift there is direct sunshine on the measurement station, which causes inconsistent and false measurements.

The MSA study is then repeated during different shifts with different conditions to prove that direct sunshine is a huge factor. Usually there are 2 choices to overcome this issue: choose a measurement system that is “immune” to the outside conditions, or get rid of the conditions.

3.6 Design of the gauge analysis

How exactly the experiment and analysis are done matters. It should never be just about “passing with a green report”. It is about choosing the proper design for what I need to do.

Let’s take some high precision measurements as an example. Usually companies choose between classical MSA (Type 1, Type 2 and Type 3) and VDA 5 (uncertainty study). Choosing classical MSA might be easier from the analysis perspective, but it might not give you all the information you need, because it does not factor in all the possible conditions and uncertainties. In such a case, the gauge might seem capable of doing this measurement, but you don’t know the actual uncertainty. During the production process you might find out that the spread of the values is too high and that your process is not capable. After deeper analysis you might find out that the problem is with the measurement system. After you repeat the MSA, this time according to VDA 5 and with all the different factors included, you find out that the uncertainty is simply too high for this process. A more precise and accurate measurement system should be chosen instead.

4 Meta data associated with parts

4.1 Machine / Tool / Cavity / Batch and others

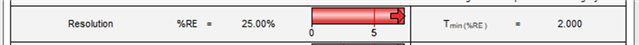

Process is not capable.

In our simple example you can see at first sight that something is wrong and that it seems to have a pattern. Engineers must understand their process. They know what type of meta data they are tracking and for what reason. It does not matter what exactly it is. It can be a machine, a tool, different batches etc. But you might want to differentiate by it in the analysis.

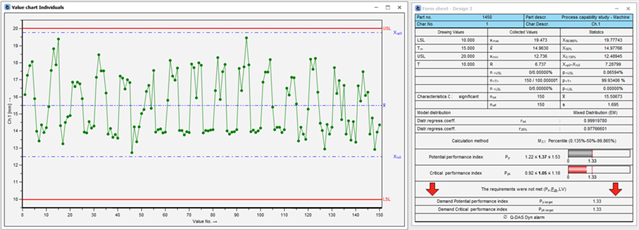

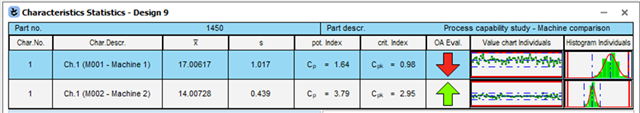

First, maybe just graphically. Machine 1 seems to have a problem in production.

To prove it by calculation, it might be good to separate the values into 2 different characteristics based on the machine number.

There is a problem in machine 1. Where exactly this problem comes from is a subject for deeper analysis. Chances are that the problem comes from the condition of the machine or from typical technology problems that are unique to each production technology.
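The separation by machine number can be sketched in a few lines. The example below groups the values by a meta data field and calculates Cp and Cpk per group. It assumes normally distributed values and the textbook formulas $C_p = (USL - LSL) / (6s)$ and $C_{pk} = \min(USL - \bar{x}, \bar{x} - LSL) / (3s)$. A real evaluation strategy may select a different distribution model. The function names and the data are hypothetical.

```python
import statistics
from collections import defaultdict


def cp_cpk(values, lsl, usl):
    """Cp and Cpk under the assumption of a normal distribution."""
    mean = statistics.mean(values)
    s = statistics.stdev(values)
    cp = (usl - lsl) / (6 * s)
    cpk = min(usl - mean, mean - lsl) / (3 * s)
    return cp, cpk


def capability_by(records, lsl, usl):
    """records: iterable of (group, value), e.g. group = machine number."""
    grouped = defaultdict(list)
    for group, value in records:
        grouped[group].append(value)
    return {g: cp_cpk(v, lsl, usl) for g, v in grouped.items()}


# Hypothetical data: tolerance 9.90 .. 10.10 mm, two machines.
machine_1 = [10.05, 10.08, 10.02, 10.09, 10.04, 10.07, 10.03, 10.10]
machine_2 = [10.00, 10.01, 9.99, 10.00, 10.02, 9.98, 10.01, 9.99]
records = [("M1", v) for v in machine_1] + [("M2", v) for v in machine_2]

result = capability_by(records, lsl=9.90, usl=10.10)
for machine, (cp, cpk) in result.items():
    print(machine, round(cp, 2), round(cpk, 2))
```

The same grouping works for any other meta data field, such as tool, cavity or batch.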

4.2 Shifts / Operator

In this article we have already talked about problems that might be associated with different shifts and operators. We connected these problems either to time/conditions or to a training problem. Shift information (time information) and operator information are useful for both problems in the analysis phase.

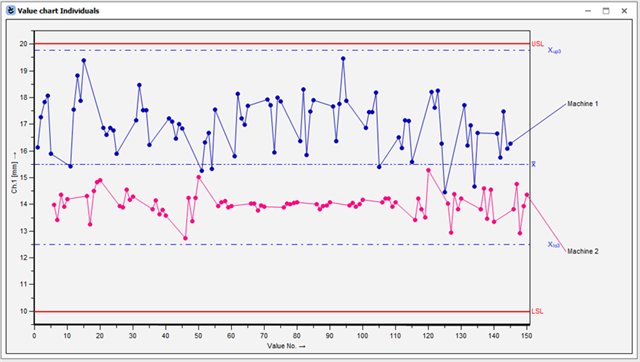

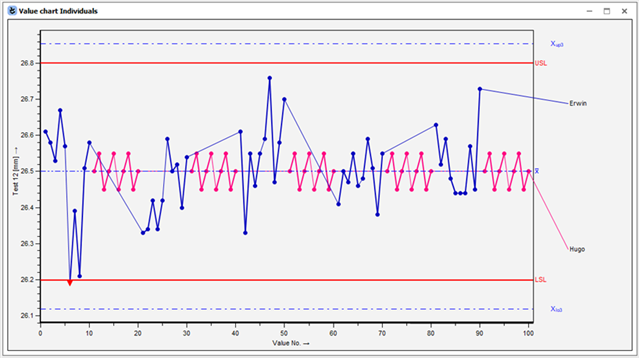

Let’s now take another example. You have a not capable process with inconsistent values over time.

After breaking down the values and showing them by the operator who did the measurement, the problem becomes quite obvious. Manipulation of the data is a big problem. Granted, even if the “pink” operator measured correctly, the process would still not be capable. But that is beside the point. Manipulation of the data should be fundamentally forbidden, because engineers then lose information and can’t focus on the real problem. Without accurate data, real problems in production cannot be detected.

4.3 Process parameters

Process parameters are whatever you consider as factors coming into the production process. They can be collected in the form of proper meta data (at the additional data fields level) or as standalone characteristics. Both cases have their own use.

Whether they are at the additional data level or standalone characteristics, the correlation between the measurements and process parameters can be analyzed. Take, for example, mold temperature in injection molding. The temperature of the mold is a factor that influences multiple aspects of quality, for example surface finish or geometric stability. With proper control of such a process parameter, we can easily identify why our process is not capable.
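One simple way to look at such a relationship is the Pearson correlation coefficient between the process parameter and the measured characteristic. The sketch below is only an illustration. The mold temperatures and dimensions are made-up numbers, and a high correlation is a hint for further investigation, not proof of a cause.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long value lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical pairs: mold temperature in degrees C and a measured dimension in mm.
mold_temperature = [58, 60, 62, 64, 66, 68]
dimension = [10.01, 10.00, 10.02, 10.03, 10.03, 10.05]

r = pearson_r(mold_temperature, dimension)
print(round(r, 2))
```

A value of `r` close to +1 or -1 suggests that the dimension follows the mold temperature, which makes the temperature a good candidate for tighter control.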

4.4 The intention of reporting procedure

Reporting to internal quality vs reporting to a customer could be different. Standards for what is acceptable and what is not vary not just between processes but also between different customers. Different aspects of the data could be a factor as well.

Imagine the production of the same parts on 2 identical lines. Parts from each line are dedicated to a different customer. Each line has different criteria for evaluation and analysis. In addition, the producer of the parts has its own, even stricter analysis criteria.

3 reports could be created: 1 for each customer and 1 for internal quality purposes. Technically very similar procedures might have slightly different results and, more importantly, a different evaluation in each report. What may be acceptable to the customers is not acceptable to the producer’s internal quality, so deeper analysis and intervention might be needed.

5 Outliers – based on technology

5.1 Basic considerations

To be able to tell that there is a problem with a specific production technology, some necessary steps have to be taken. We are trying to control the process and production based on the data we collect. We have to be sure that we can rely on the information hidden in the data.

First, be sure the data collection process is good enough. That means a proper MSA and adopting SPC principles to notice a change in the process.

Independently of the production technology, for every process we have, we should do a study to prove that our “machine” is capable of producing parts within the desired specifications. Until you have proof of stable and reproducible production, no serial process should start. If the machine capability study and the set-up of the machine were not done properly, and the serial process is not capable, the answer lies in not understanding the machine and process well enough to make adjustments on the spot.

5.2 Definition and handling

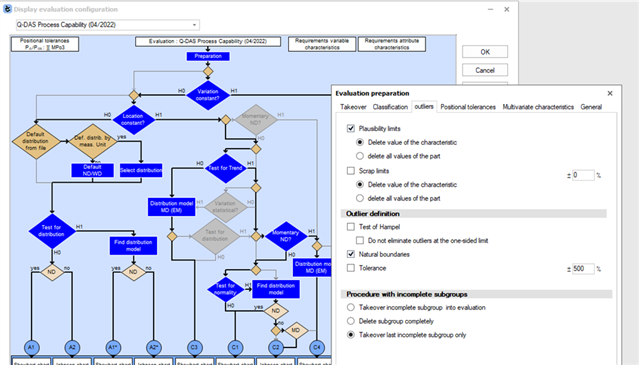

An outlier based on production technology can be defined as a value with an unexpectedly large deviation. In the evaluation strategy we have some options that can help us identify an outlier and perhaps delete it automatically.

We can, for example, define that any value outside of X% of the tolerance is an outlier and that we don’t want to include this value in the evaluation. Or we can run the Hampel test to find out mathematically whether some values are considered outliers.
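Both rules can be sketched in a few lines. The example below only flags candidate values and does not delete anything. For the Hampel test it uses the common textbook form of the Hampel identifier (distance from the median compared with a multiple of the scaled median absolute deviation). Whether a given software implements exactly this variant is an assumption. The reading of “X% of tolerance” as a window centred on the middle of the tolerance is also an assumption, and all names and values are hypothetical.

```python
import statistics


def hampel_flags(values, k=3.0):
    """Flag values further than k scaled MADs away from the median."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return []  # more than half of the values are identical, rule not usable
    limit = k * 1.4826 * mad  # 1.4826 scales the MAD to a normal sigma
    return [v for v in values if abs(v - med) > limit]


def outside_tolerance_window(values, lsl, usl, percent):
    """Flag values outside a window of `percent` % of the tolerance width,
    centred on the middle of the tolerance (assumed interpretation)."""
    mid = (lsl + usl) / 2
    half_window = (usl - lsl) / 2 * percent / 100
    return [v for v in values if abs(v - mid) > half_window]


# Hypothetical data: tolerance 9.90 .. 10.10 mm, one suspicious value.
values = [10.00, 10.01, 9.99, 10.02, 9.98, 10.00, 10.50]
print(hampel_flags(values))
print(outside_tolerance_window(values, lsl=9.90, usl=10.10, percent=150))
```

Returning the flagged values instead of silently removing them keeps the decision with the engineer.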

Is it a good idea to automatically exclude those values from the evaluation? It is not, because we are talking about outliers based on production technology. Such an outlier tells us something: there is either a random or a systematic problem in the process, and we should take care of it.

So how to handle such outliers? Identify them and act upon them. Search for the root cause in production and don’t ignore them.

6 Outliers – based on data quality

Outliers based on data quality are different from the ones we consider outliers based on production technology. We can define such an outlier as an implausible value. When the value truly is implausible, it should not be included in the evaluation. In Q-DAS software there are some options that can be used to identify and exclude outliers automatically.

For example, each characteristic can have plausibility limits. Based on those limits, outliers can be identified and excluded automatically. When you have a clear definition of what an implausible value is, this can be a good idea.
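The principle of plausibility limits is simple enough to show in a short sketch. The function below is not the Q-DAS implementation. It is a hypothetical illustration that splits the readings into plausible and implausible values and keeps the implausible ones for follow-up instead of throwing them away.

```python
def split_by_plausibility(values, lower, upper):
    """Return (plausible, implausible) values for the given plausibility limits."""
    plausible = [v for v in values if lower <= v <= upper]
    implausible = [v for v in values if not (lower <= v <= upper)]
    return plausible, implausible


# Hypothetical readings of a 10 mm feature; 0.0 and 999.999 look like
# typical transfer or gauge glitches rather than real parts.
readings = [10.01, 9.99, 0.0, 10.02, 999.999, 10.00]
plausible, implausible = split_by_plausibility(readings, lower=5.0, upper=15.0)
print(plausible)
print(implausible)
```

Keeping the second list makes it possible to check later where the glitches came from.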

But the preferable handling for any type of outlier is a conscious human choice: identify an outlier, recognize that it is implausible and exclude it from the evaluation.

How are these implausible values generated? Again, by a random or systematic error of the measurement software, gauge, data transfer or similar glitches anywhere in the data collection system. Even here it is preferable to act upon these outliers, try to find out why they occurred and, if possible, fix the issue.

Another article in our “How to” section is focusing on dealing with outliers: https://nexus.hexagon.com/community/public/q-das/w/how-to/12836/dealing-with-outliers

7 SPC basics aka what to do with all this

Technically speaking, SPC starts with MSA and never ends. There are a lot of things to be considered and done. And because this sounds complicated, for the purpose of this article, let’s break it down and simplify it.

Data collection is a key element of SPC. You have to have data to be able to assess your processes and to react quickly to potential problems. But what data and how? A strategy needs to be well thought out before executing.

I would like to draw a comparison here with digitalization in general. In the manufacturing industry, digitalization is one of the key elements for success. It does not really matter what you are trying to digitize, it usually is important. But it should never be done without a clear strategy and goals. It cannot be done randomly, because then it could create more trouble than solutions. With SPC it’s the same. Do not collect everything. Focus on the critical processes first. Draw up a plan for how and when to collect the data and what to focus on in that data. Use a sufficient subgroup size to identify the problems.

SPC has the potential to help you control your process in an effective and flexible way. Every problem listed in this article can be detected, or avoided before it happens, using SPC.

I am not going to describe in this chapter how to start with SPC, because we already have a series of articles focusing on this topic. These articles will not substitute for an actual workshop, but they are a good start. They can be found on our community website under the “How to” section: https://nexus.hexagon.com/community/public/q-das/w/how-to/13046/how-to-spc

8 My process is not capable and that is OK

What if you do everything “right” and the process is still not capable from the long-term point of view? Before we answer that question, we should take a look at why we are calculating capability in the first place.

We want to produce parts according to specification with a limited number of faulty parts. What does it mean to produce a part according to specification? It can mean producing a part to its drawing, where geometrical features comply with the tolerance limits. But it can also mean simply producing a functional part.

Does it always mean that the part is no good when it is not produced within the tolerance limits from a drawing? The answer is: it depends on the case.

My favorite example is a plastic button. The button is part of a larger assembly, where it should “pop in” thanks to tiny plastic pins. The pins are designed to be flexible. There are multiple features that are inspected, and some of them are of course important. Let’s take 2 features: the position of a pin and the distance between the pins measured at the end of the pin.

The position of a pin is important. It directly affects the compatibility in the assembly. The distance between pins is important as well, but only to some degree. There definitely is a “functional” tolerance limit. Very often with such plastic parts, the functional limit is large compared to the process spread. On the other hand, the tolerance limit from the drawing is small compared to the functional limit. During capability analysis it would then be harder to comply with the requirements set by the customer.

Let’s get back to my original question. What if you do everything right and the process is still not capable? It does not matter as long as you produce functional parts. However, being constantly “alarmed” by a not capable process is not very nice. The solution would be to go back to the drawing board and discuss with the customer what tolerance should be used for the capability analysis of such a feature.

Disclaimer!!!

Even though this chapter is based on real world experience, it is still an oversimplified story. The point of this chapter is to remind everyone not to just blindly follow the quality analysis process, but also to think about the technical points behind it. The same principle should be applied when it comes to requirements on capability indices, because 1.33 is not a sacred number.

8.1 Overall evaluation

Another scenario deserves special attention. Imagine you are reporting to your customer how the process behaves in the long run. The evaluation contains everything: every machine, material, process parameter, change etc. When you break down this data, maybe the process is capable. But when you put it all in one big evaluation, you will see a mixed distribution in your data, and the process can look “funky” and even not capable.

And that is absolutely OK as long as you are able to prove that you control the process properly and can show a capable process for different time periods and different conditions.
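The effect of such a mixed distribution is easy to reproduce with made-up numbers. In the sketch below, two hypothetical conditions (for example two materials) each give a tight and capable process, but they run at different levels. Evaluated together, the same data looks not capable. Cpk is calculated with the textbook formula under the assumption of a normal distribution, which a mixed distribution clearly violates. That is part of the point.

```python
import statistics


def cpk(values, lsl, usl):
    """Cpk under the assumption of a normal distribution."""
    mean = statistics.mean(values)
    s = statistics.stdev(values)
    return min(usl - mean, mean - lsl) / (3 * s)


# Hypothetical data: tolerance 9.90 .. 10.10 mm, two conditions at different levels.
LSL, USL = 9.90, 10.10
condition_a = [9.95, 9.955, 9.945, 9.95, 9.96, 9.94]
condition_b = [10.05, 10.055, 10.045, 10.05, 10.06, 10.04]

cpk_a = cpk(condition_a, LSL, USL)
cpk_b = cpk(condition_b, LSL, USL)
cpk_all = cpk(condition_a + condition_b, LSL, USL)

print(round(cpk_a, 2), round(cpk_b, 2), round(cpk_all, 2))
```

Each condition on its own passes a typical requirement, while the overall value falls well below it.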

9 Changes over time

The last part of the article has to be dedicated to changes. Changes happen over time. This is, in most cases, inevitable. Everybody knows this. It can be a change in the process / additional data / the conditions / measurement program / DFQ output / gauge maintenance etc. These changes can be directly responsible for a not capable process. That was basically already covered above.

But even though you strive to control the environment around these changes, they can still create confusion and non-capability by accident. Think about a change of the tolerance limits in the drawing. The change will also be transferred to the measurement program. What if this change is not recognized during data collection and new data is mixed with the old data? That creates inaccuracies in the analysis, and it is confusing at best.

My message here is: have a process in place to control the changes. Think about what changes are likely to happen along the way and prepare for them. It will be, again, 1 less thing to worry about.
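The tolerance change example can be illustrated with a small sketch. Each record carries the limits that were valid when the part was measured. Counting NOK parts against those limits gives a different picture than applying the newest limits to all data. The record layout and the numbers are hypothetical.

```python
def count_nok(records):
    """records: (value, lsl, usl), with the limits valid at measurement time."""
    return sum(1 for value, lsl, usl in records if not (lsl <= value <= usl))


def count_nok_with_fixed_limits(records, lsl, usl):
    """Apply one set of limits to all records, ignoring the stored limits."""
    return sum(1 for value, _, _ in records if not (lsl <= value <= usl))


# Hypothetical data: the drawing tolerance was tightened from
# 9.90 .. 10.10 mm to 9.95 .. 10.05 mm at some point.
old_parts = [(10.08, 9.90, 10.10), (9.92, 9.90, 10.10), (10.00, 9.90, 10.10)]
new_parts = [(10.01, 9.95, 10.05), (10.04, 9.95, 10.05), (9.97, 9.95, 10.05)]
records = old_parts + new_parts

print(count_nok(records))                                 # limits valid at the time
print(count_nok_with_fixed_limits(records, 9.95, 10.05))  # newest limits for all
```

Parts that were perfectly fine under the old drawing suddenly count as NOK when old and new data are evaluated together against the new limits.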