Resolution is an interesting topic. If many people read this page, one-third will agree, one-third will disagree, and one-third will never have considered the question before. Nevertheless, the author will present his views here.

What is the resolution, and why is it important?

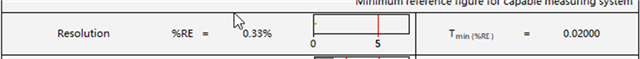

According to the literature, the resolution is the "smallest measurable change." This is important because it is a requirement that must be checked in classic MSA procedures.

It also matters when measurement uncertainty is evaluated.

However, this statement is not entirely accurate. The resolution is not checked simply because it is a requirement. It is checked because it is one of the fundamental components of a measurement system and influences the subsequent evaluation and the quality of the measurement.

So, what exactly is the “resolution”?

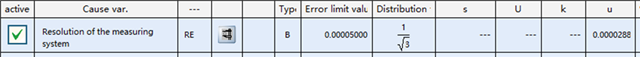

If you consider a handheld measuring device with a digital display, the concept is simple.

2 decimal places: the resolution is 0.01.

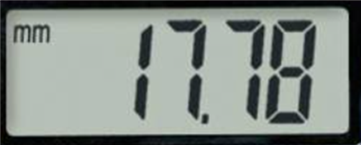

But anyone who has ever seen a characteristic mask in solara.MP will have noticed that there are 2 separate fields: one for the resolution and one for the number of decimal places.

At first glance, both fields seem to say the same thing, and in the case of the calliper, they do.

So far, so easy. Now, however, let us turn our attention to measuring machines.

Here we use measuring device software. Touch probes or lasers capture a point cloud, which can also be obtained through an optical scan or by other means. The measuring software then mathematically determines the “measured values” from this point cloud. It is all calculated data. A diameter is not “measured” here; it is calculated from the point cloud, for example according to the Gaussian (least-squares) principle.
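To make “calculated, not measured” concrete, here is a minimal sketch of how a diameter can be derived from probe points by least squares. It is an assumption-laden stand-in, not the method of any particular measuring software: metrology software usually minimises geometric (orthogonal) distances for a Gaussian fit, whereas this sketch uses the simpler algebraic (Kasa) circle fit, which gives the same circle when the points lie exactly on one. The function names and the sample points are hypothetical.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m, v):
    """Solve m @ x = v for a 3x3 system using Cramer's rule."""
    d = _det3(m)
    solution = []
    for col in range(3):
        mc = [row[:] for row in m]
        for i in range(3):
            mc[i][col] = v[i]
        solution.append(_det3(mc) / d)
    return solution


def fit_circle_least_squares(points):
    """Algebraic (Kasa) least-squares circle fit.

    Fits x^2 + y^2 + D*x + E*y + F = 0 to the points in the least-squares
    sense and returns (center_x, center_y, diameter).
    """
    sxx = sxy = syy = sx = sy = sxz = syz = sz = 0.0
    for x, y in points:
        z = x * x + y * y
        sxx += x * x
        sxy += x * y
        syy += y * y
        sx += x
        sy += y
        sxz += x * z
        syz += y * z
        sz += z
    n = float(len(points))
    # Normal equations of the linear model [x, y, 1] . [D, E, F] = -(x^2 + y^2)
    m = [[sxx, sxy, sx],
         [sxy, syy, sy],
         [sx, sy, n]]
    v = [-sxz, -syz, -sz]
    d, e, f = _solve3(m, v)
    cx, cy = -d / 2, -e / 2
    radius = math.sqrt(cx * cx + cy * cy - f)
    return cx, cy, 2 * radius


# Hypothetical probe points: 8 points on a circle with diameter 10.1234567890123
true_d = 10.1234567890123
pts = [(1.0 + true_d / 2 * math.cos(k * math.pi / 4),
        2.0 + true_d / 2 * math.sin(k * math.pi / 4)) for k in range(8)]
cx, cy, diameter = fit_circle_least_squares(pts)
print(repr(diameter))      # the full double, no rounding applied
print(f"{diameter:.4f}")   # the same value shown with 4 decimal places
```

The result is a full double-precision number. Nothing in the calculation itself imposes a step size; any rounding happens only when the value is displayed or written out.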

So what is the “resolution” now? And why do we have “decimal places”?

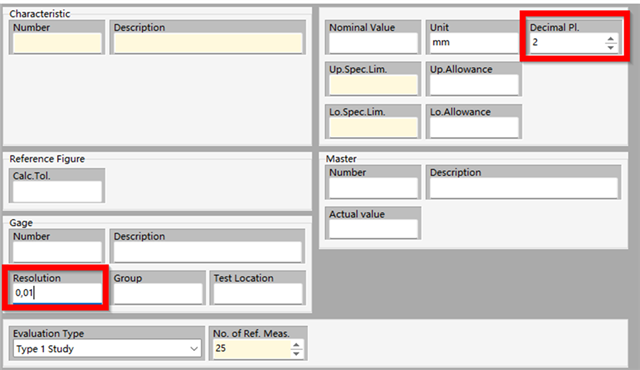

A “calculated” value could look something like this. In the Q-DAS data format, a value is of type “double”:

10.1234567890123

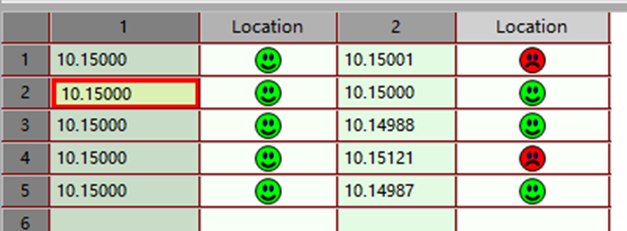

When software transfers a value like this, the number of “decimal places” can be defined in the DFQ file or in the software. This setting applies to the view, and to the view only.

We have X digits after the decimal point in the file, but we show only 4 decimal places.

And this is important to understand!

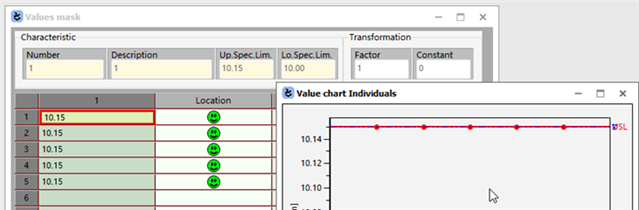

Let us take a look at these values. They are all the same, all on the specification limit, and therefore all “OK”.

Now let us look at another characteristic. The same values? Yet 2 are “outside” (bad) and 3 are “inside” (green).

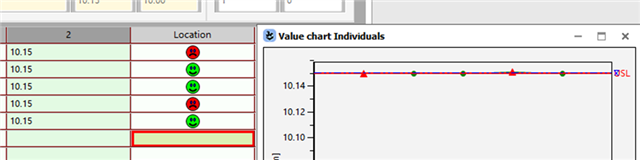

The difference only becomes visible when more decimal places are shown.

Summary: the number of “decimal places” only defines the view. The calculation in the background uses all available digits.
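The effect described above can be reproduced in a few lines. This is a sketch with invented numbers: the upper specification limit `USL` and the five values are hypothetical and are not taken from the screenshots. All five values display as the same 4-decimal number, but the limit check on the full values gives 2 outside and 3 inside. For contrast, the last line checks the values after rounding them to 4 decimal places first, in which case all of them land on the limit and pass.

```python
# Hypothetical upper specification limit and values (invented for illustration)
USL = 10.1235
values = [10.12346, 10.12349, 10.12350, 10.12353, 10.12354]


def classify(vals, usl):
    """Return 'OK' or 'NOK' for each value compared against the upper limit."""
    return ["OK" if v <= usl else "NOK" for v in vals]


displayed = [f"{v:.4f}" for v in values]  # what the user sees: 4 decimal places
full_result = classify(values, USL)       # what the background calculation uses
rounded_result = classify([round(v, 4) for v in values], USL)  # if rounded first

for shown, v, res in zip(displayed, values, full_result):
    print(shown, repr(v), res)
```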

But then what is the “resolution”?

Well, the world of measurement software manufacturers is split into two camps!

One camp writes all possible decimal places in the data format. However, for viewing in the software, the K field K2022 is used to reduce the display to 4 decimal places:

K0100 1

K1001 Part

K2002/1 Characteristic

K2022/1 4

K0001/1 10.1234567890123

The other camp writes the value “already rounded”, to the same number of decimal places that they defined for the display:

K0100 1

K1001 Part

K2002/1 Characteristic

K2022/1 4

K0001/1 10.1235
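The two camps differ only in how the value string is produced before it is written. The sketch below generates both variants of the example records. `dfq_lines` is a hypothetical helper, not part of any Q-DAS or solara.MP API, and the record layout simply copies the example above; a real DFQ writer has to follow the full Q-DAS format specification. The full-precision variant uses Python's `repr`, which writes the shortest string that reads back as the identical double.

```python
value = 10.1234567890123  # calculated value, a Python float (IEEE 754 double)
decimals = 4              # display setting written to K2022


def dfq_lines(v, dec, round_before_writing):
    """Hypothetical helper: build the example K-field records for one value."""
    written = f"{v:.{dec}f}" if round_before_writing else repr(v)
    return [
        "K0100 1",
        "K1001 Part",
        "K2002/1 Characteristic",
        f"K2022/1 {dec}",
        f"K0001/1 {written}",
    ]


print("\n".join(dfq_lines(value, decimals, round_before_writing=False)))
print()
print("\n".join(dfq_lines(value, decimals, round_before_writing=True)))
```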

And what can we see?

A difference of 10.1234567890123 - 10.1235 = -0.0000432109877.
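The subtraction can be checked exactly with Python's `decimal` module, which avoids the binary noise a plain float subtraction would add to the last digits. The sketch also shows why this is a resolution effect: rounding to the nearest step of 0.0001 can never move a value by more than half a step, so the difference is bounded by 0.00005.

```python
from decimal import Decimal

full = Decimal("10.1234567890123")  # value as written by the full-precision camp
rounded = Decimal("10.1235")        # value as written by the rounding camp
resolution = Decimal("0.0001")      # step size implied by 4 decimal places

difference = full - rounded
print(difference)

# Rounding to the nearest step never moves a value by more than half a step.
print(abs(difference) <= resolution / 2)
```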

This is an “uncertainty influence”. The author is, of course, aware that no one is claiming accuracy to the 11th decimal place.

The author's blunt conclusion:

If measuring device software reduces the number of decimal places written to the number of decimal places displayed, i.e. rounds the measured value, then the resolution effect is the same as for a calliper and must be taken into account in full as a measurement uncertainty influence.
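For the rounded case, a common way to quantify the resolution influence is to assume that the rounding error is uniformly distributed between -RE/2 and +RE/2. That gives a standard uncertainty of u_RE = (RE/2) / sqrt(3) = RE / (2 * sqrt(3)), the model used in the GUM and in VDA Volume 5. The sketch below assumes that rectangular model; `resolution_uncertainty` is a hypothetical helper name.

```python
import math


def resolution_uncertainty(resolution):
    """Standard uncertainty from a display or rounding resolution RE.

    Assumes the rounding error is uniformly distributed in [-RE/2, +RE/2],
    which gives u_RE = (RE/2) / sqrt(3) = RE / (2*sqrt(3)).
    """
    half_width = resolution / 2
    return half_width / math.sqrt(3)


# Calliper with 2 decimal places; rounded measuring-machine output with 4
for re_step in (0.01, 0.0001):
    print(f"RE = {re_step:g}  ->  u_RE = {resolution_uncertainty(re_step):.3g}")
```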

However, if the measuring device software outputs the value at the full writable precision, so that the decimal places are reduced only in the processing software (while the mathematics in the background calculates with the full values), then it could be argued that this measuring device does not have to take resolution into account as an “uncertainty component.”